Introduction

Quantum computing is emerging as a transformative field, promising to tackle computational problems far beyond the reach of today’s classical computers. At the heart of this revolution is the pursuit of quantum advantage – the milestone where a quantum computer definitively outperforms classical machines on a useful task. Achieving quantum advantage is a critical goal for industry leaders and researchers, as it would validate that quantum computers can solve certain problems faster or more efficiently than any classical computer, or even solve problems that are currently impossible to compute in practice. Tech giants like IBM, Google, and Microsoft have all invested heavily in quantum technologies, each vying to be the first to reach this milestone. Their race is not just one of prestige; it carries high stakes for areas such as cryptography, optimization, and drug discovery, where quantum computing could enable breakthroughs unattainable by classical means. IBM, a long-time pioneer in computing, is now positioning itself at the forefront of this race, even as rivals like Google and Microsoft and a host of startups intensify their efforts. In this report, we take a deeper look at what quantum advantage entails, how IBM and its competitors are approaching the challenge, and why this race could revolutionize industries worldwide.

Understanding Quantum Advantage

In simple terms, quantum advantage refers to the point at which a quantum computer can perform a task that a classical computer cannot practically execute within a reasonable timeframe. It marks the moment when quantum computation isn’t just theoretically faster, but demonstrably superior for a specific problem. IBM defines quantum advantage as running a computation more accurately, cheaply, or efficiently on a quantum system than on any classical system. In other words, it’s when a quantum solution outperforms all known classical methods for a given problem. This concept is closely related to what has been called “quantum supremacy,” though many experts prefer “advantage” for its emphasis on practical usefulness and its avoidance of the misleading notion that quantum computers will categorically replace classical ones. Importantly, quantum advantage doesn’t mean quantum computers excel at every task; rather, they outperform classical computers in certain domains or problem instances. For example, quantum devices might efficiently simulate complex quantum systems or tackle certain combinatorial problems that would take classical machines an infeasible amount of time (e.g., millions of years). Achieving this threshold is an essential proof point that the quirky principles of quantum mechanics – superposition, entanglement, and quantum parallelism – can be harnessed for real computational gains.
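To make superposition and entanglement slightly more concrete, here is a minimal sketch of a two-qubit state-vector simulation in plain Python. It is an illustrative toy written for this report, not Qiskit or any other real SDK, and the helper names (`apply_h`, `apply_cnot`, `probabilities`) are hypothetical. A Hadamard gate puts one qubit into an equal superposition, and a CNOT gate then entangles the second qubit with it, so that only the outcomes 00 and 11 can be observed.

```python
import math

# Toy state-vector simulator (illustrative only, not a real SDK's API).
# A 2-qubit state is a list of 4 complex amplitudes; index i encodes the
# bitstring with qubit 0 as the least significant bit.


def apply_h(state, target):
    """Hadamard on `target`: maps each (|0>, |1>) amplitude pair to (sum, difference) / sqrt(2)."""
    s = 1 / math.sqrt(2)
    new = list(state)
    for i in range(len(state)):
        if not (i >> target) & 1:  # visit each (target=0, target=1) pair exactly once
            j = i | (1 << target)
            new[i] = s * (state[i] + state[j])
            new[j] = s * (state[i] - state[j])
    return new


def apply_cnot(state, control, target):
    """CNOT: flip `target` whenever `control` is 1 by permuting amplitudes."""
    new = list(state)
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new


def probabilities(state):
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]


state = [1 + 0j, 0j, 0j, 0j]                    # start in |00>
state = apply_h(state, target=0)                # qubit 0 -> (|0> + |1>)/sqrt(2)
state = apply_cnot(state, control=0, target=1)  # entangled: (|00> + |11>)/sqrt(2)
probs = probabilities(state)
print([round(p, 3) for p in probs])  # [0.5, 0.0, 0.0, 0.5]
```

Measuring either qubit now fixes the other: the two results always agree, which is the hallmark of entanglement.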

It’s worth noting that early claims of quantum advantage will likely be narrow and subject to debate. Researchers must rigorously verify that a quantum result is correct and that no improved classical algorithm can match it. In practice, the first demonstrations may involve hybrid approaches where quantum processors work in tandem with classical computers, rather than acting in isolation. Because classical techniques continue to improve as well, any claim of quantum advantage invites scrutiny: the community will attempt either to reproduce the quantum result or to find clever classical methods that refute the advantage. Therefore, quantum advantage is not expected to be a single sudden victory, but rather a series of increasingly solid demonstrations over time. Nonetheless, the first clear-cut examples – where a quantum computer solves a problem with unambiguous superiority – will herald a new era in computing. According to IBM’s researchers, this era of practical quantum advantage is on the horizon, with predictions that the first confirmed advantages could be achieved in the next few years.

IBM’s Quantum Computing Drive

IBM has been a driving force in quantum computing research and is determined to be a leader in reaching quantum advantage. The company has laid out an ambitious roadmap that pairs hardware breakthroughs with software and ecosystem development. On the hardware front, IBM has steadily scaled up the size and quality of its quantum processors. In late 2023, IBM unveiled the “Condor” quantum processing unit with 1,121 qubits, the largest qubit count of any superconducting quantum chip at the time. Alongside it, IBM introduced Quantum System Two, a new modular quantum computer architecture designed to link multiple smaller quantum chips into one system. This modular approach is critical for scaling: instead of building one gigantic, error-prone chip, IBM can interconnect several more manageable, less error-prone chips (such as its 133-qubit “Heron” processors) to act as a larger processor. The ultimate goal of IBM’s roadmap is to deliver a practically useful, fully error-corrected quantum computer by 2029. In other words, IBM aims to build a fault-tolerant quantum machine within this decade – a bold target that, if met, would likely imply achieving quantum advantage (and beyond) for real-world problems.

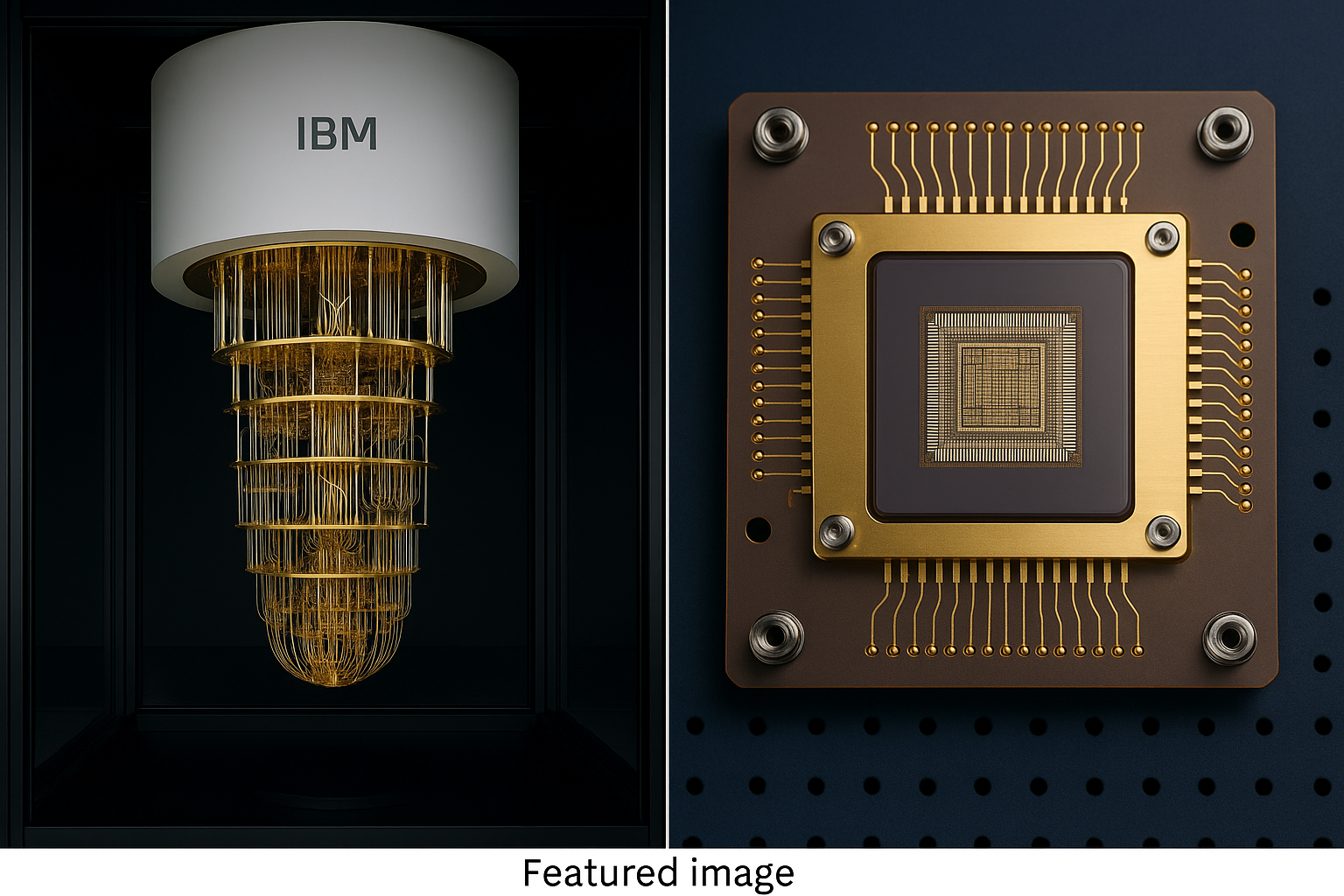

IBM’s Quantum System Two (left) and a 133-qubit Heron quantum processor (right) – key hardware innovations in IBM’s quest for quantum advantage. IBM’s processor lineup also includes the 1,121-qubit “Condor” chip, its largest processor by qubit count.

The Quantum System Two is a modular system that can link multiple chips, enabling IBM to scale up quantum computers by combining several processors into one functional unit. This modular design, along with improved qubit stability, aims to address the error-rate and scalability challenges on the path toward a fault-tolerant quantum computer.

Hardware alone, however, is only half the battle. IBM recognizes that achieving quantum advantage also requires advancements in quantum software and algorithms. To this end, IBM has invested heavily in developing a robust quantum computing ecosystem. The company’s open-source software framework, Qiskit, has become a widely used toolkit for writing quantum programs. IBM continually updates Qiskit to make quantum programming more accessible, adding higher-level libraries and tools so that developers and domain experts (not just PhDs in physics) can experiment with quantum solutions. Additionally, IBM has cultivated a large community through its IBM Quantum Network, which allows universities, startups, and enterprise partners to access IBM’s quantum processors via the cloud. As of 2024, over 600,000 users and 250 institutions have used IBM’s quantum cloud services for research and development. This collaborative approach is expanding the pool of people exploring quantum algorithms for meaningful problems. IBM even opened dedicated Quantum Data Centers in New York and Germany to support growing usage, and it launched initiatives like the Quantum Innovation Centers (e.g., a new National Quantum Algorithm Center in Illinois) to accelerate algorithm development for practical use cases.

IBM’s strategy under the leadership of quantum research VP Jay Gambetta emphasizes an incremental but steady march toward quantum advantage. Rather than chasing one-off “supremacy stunts,” IBM focuses on useful milestones. In fact, Gambetta has noted that IBM’s quantum systems have already crossed a point of “quantum utility,” where they can run certain complex computations at a scale beyond brute-force classical simulation. For instance, in 2023 the average size of quantum circuits run on IBM’s cloud jumped from 13 qubits to over 100 qubits, a scale at which exact simulation of the full quantum state becomes infeasible. This indicates that researchers are now using IBM’s quantum machines for experiments that push beyond the reach of brute-force classical computation. However, IBM views this as an intermediate step. The true goal is quantum advantage, defined by Gambetta as doing “something you can’t do with a classical computer” outright. To get there, IBM is tackling the hardest challenge in quantum computing: error correction. Today’s quantum bits (qubits) are notoriously error-prone, losing their quantum state (through decoherence or noise) in fractions of a second. Overcoming this requires encoding each logical qubit, whose error rate is far lower than that of any single physical qubit, across many physical qubits using error-correcting codes. IBM is researching error correction schemes that are more hardware-efficient than the traditional “surface codes.” Notably, IBM is exploring quantum low-density parity-check (qLDPC) codes that could achieve fault tolerance with far fewer physical qubits by leveraging a modular, linked-chip design. Gambetta expressed confidence that by 2029 IBM will have implemented error correction, in line with its roadmap for a working fault-tolerant machine. If successful, IBM’s combination of scaled hardware, robust software, and error correction could yield one of the first clear demonstrations of quantum advantage on a real-world problem.
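The jump from 13 to over 100 qubits matters because a brute-force classical simulator must store one complex amplitude for every possible bitstring, that is, 2^n of them. The back-of-the-envelope sketch below assumes 16 bytes per amplitude (double-precision complex numbers); it deliberately ignores smarter techniques such as tensor-network methods, which can simulate some large but shallow or structured circuits without storing the full state. The function name is illustrative.

```python
# Rough memory estimate for brute-force state-vector simulation.
# Assumption: one double-precision complex amplitude (16 bytes) per bitstring.
BYTES_PER_AMPLITUDE = 16


def statevector_bytes(num_qubits: int) -> int:
    """Bytes needed to store all 2**num_qubits amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


for n in (13, 30, 50, 100):
    print(f"{n:>3} qubits: {statevector_bytes(n):.2e} bytes")
```

A 13-qubit state fits in 128 KiB, a 30-qubit state already needs 16 GiB, and a 100-qubit state would need more than 10^31 bytes, far more memory than any computer has ever had.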

Google and Microsoft: Competing Approaches

IBM is not alone in the quest for quantum advantage. Google and Microsoft, among others, are formidable competitors, each pursuing different technological approaches to reach the same goal. Google in particular made headlines in 2019 when it announced it had achieved “quantum supremacy.” Using a 53-qubit processor called Sycamore, Google performed a carefully chosen calculation (random circuit sampling) in about 200 seconds – a task they estimated would take a classical supercomputer 10,000 years to reproduce. This announcement, published in Nature, was a historic moment: it marked the first time a quantum computer reportedly solved a problem vastly faster than any classical machine could. However, the result spurred debate. IBM researchers argued that with clever algorithms and optimal use of disk storage, a classical supercomputer could actually simulate the same task in as little as 2.5 days, not millennia. By IBM’s stricter definition, Google’s demonstration hadn’t truly hit the “cannot be done classically” bar. Nonetheless, Google’s experiment was a significant scientific achievement, showcasing the power of quantum computation on a specific problem. More importantly, it galvanized the field to pursue useful quantum advantage, not just supremacy demonstrations on contrived tasks.
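Google scored its 2019 samples with linear cross-entropy benchmarking (XEB): the fidelity estimate is 2^n times the average ideal probability of the bitstrings the processor actually produced, minus one. Uniform noise scores about 0, while a device that faithfully samples the ideal distribution scores higher (close to 1 for the kind of random circuits used in that experiment). The sketch below uses a made-up two-qubit distribution and a hypothetical `linear_xeb` helper; in a real experiment the ideal probabilities come from classically simulating the circuit, which is exactly what becomes hard at the scale where advantage is claimed.

```python
from typing import Dict, Sequence


def linear_xeb(ideal_probs: Dict[str, float], samples: Sequence[str], num_qubits: int) -> float:
    """Linear XEB fidelity estimate: 2**n * (mean ideal probability of observed samples) - 1."""
    mean_p = sum(ideal_probs.get(s, 0.0) for s in samples) / len(samples)
    return (2 ** num_qubits) * mean_p - 1


# Made-up ideal output distribution of a hypothetical 2-qubit circuit.
ideal = {"00": 0.4, "01": 0.1, "10": 0.1, "11": 0.4}

# Uniform noise: every bitstring appears equally often, so the score is ~0.
noise_samples = ["00", "01", "10", "11"]
# Samples concentrated on the likely outputs score above 0 (here ~0.6).
good_samples = ["00", "11", "00", "11"]

print(f"{linear_xeb(ideal, noise_samples, 2):.3f}")
print(f"{linear_xeb(ideal, good_samples, 2):.3f}")
```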

Since 2019, Google has continued to advance its quantum hardware and address the biggest shortcomings: qubit errors and scalability. In late 2024, Google unveiled a new 105-qubit quantum processor code-named Willow. This chip set records in a benchmark test and notably demonstrated a promising approach to error correction. In one experiment, Willow completed a random circuit sampling problem in under 5 minutes, a task projected to take the fastest classical supercomputers an astronomically long time (on the order of 10^25 years, far longer than the age of the universe). While that specific problem has no direct practical use, it underscores the raw computational lead a quantum device can achieve under the right conditions. Even more crucial was Google’s progress in “below-threshold” error correction: as they enlarged their surface-code logical qubits (from code distance 3 to 5 to 7), the logical error rate fell exponentially, roughly halving at each step, instead of increasing. In other words, adding qubits actually improved reliability. This result suggests Google is on track toward scalable, fault-tolerant quantum computing. Google’s researchers have also been exploring hybrid quantum-classical algorithms and even the intersection of quantum computing with AI and machine learning, hoping to find advantageous applications in areas like complex optimization and data analysis. All these efforts position Google as a leading contender; its strategy often emphasizes high-profile experimental milestones and rapid hardware improvements (e.g., increasing qubit count and fidelity), backed by strong theoretical research in algorithms and error correction.
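The idea of operating “below threshold” can be illustrated with the simplest possible code: a classical repetition code that stores one bit as d copies and decodes by majority vote. This is a deliberate simplification, not the surface code Google used: it protects only against bit flips and assumes each copy fails independently with probability p. It still shows the same qualitative behaviour. When p is below the code’s threshold (50% in this toy model; around 1% for the surface code under common noise assumptions), adding qubits drives the logical error rate down, and above threshold it makes things worse. The function name is illustrative.

```python
from math import comb


def logical_error_rate(p: float, distance: int) -> float:
    """Probability that majority vote over `distance` copies (odd) returns the wrong bit,
    assuming each copy is flipped independently with probability p."""
    majority = distance // 2 + 1
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(majority, distance + 1)
    )


for p in (0.01, 0.6):  # one physical error rate below threshold, one above
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    print(f"p={p}: " + ", ".join(f"d={d}: {r:.2e}" for d, r in zip((3, 5, 7), rates)))
```

At p = 0.01 the logical error rate shrinks by more than an order of magnitude with each step in distance; at p = 0.6 it grows.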

Microsoft, by contrast, has taken a distinctive, long-horizon approach in the quantum race. Rather than using the superconducting qubits that IBM and Google employ, Microsoft has invested heavily in developing topological qubits – a fundamentally different type of quantum bit that promises inherent stability against errors. For nearly two decades, Microsoft’s quantum research group (including Station Q) has pursued elusive quasiparticles called Majorana zero modes, which could form the basis of these topological qubits. In February 2025, Microsoft claimed a major breakthrough: a chip dubbed “Majorana 1,” which the company says contains eight topological qubits built from Majorana-based structures. The topological design is touted as a potential game-changer because a topological qubit stores quantum information in a distributed, non-local way that naturally protects it from certain errors. In theory, this means far fewer physical qubits would be needed to make a robust logical qubit, bypassing some of the enormous overhead faced by other approaches. Microsoft’s roadmap envisions that if these topological qubits can be scaled up, they could lead to a million-qubit quantum computer much faster than other technologies, with the company suggesting such machines could arrive in years rather than decades. This would be an astonishing leap, potentially enabling true quantum advantage for a wide range of problems. However, Microsoft’s claims are still being vetted by the scientific community, and as of 2025 the company has not demonstrated a large-scale quantum computation on par with Google’s or IBM’s devices. Microsoft’s approach is essentially a high-risk, high-reward bet: spend years developing a superior qubit technology that could eventually surpass the scalability and reliability of the more established superconducting or trapped-ion qubits.

In the meantime, Microsoft is also active in quantum software, offering the Azure Quantum cloud platform and working on quantum programming tools. Through partnerships, researchers can run small quantum experiments or simulations via Azure, keeping Microsoft relevant in the community as it works toward a potentially revolutionary hardware platform.

Beyond these three giants, the broader quantum computing ecosystem includes numerous startups and national research programs. Companies like IonQ and Quantinuum (which use trapped-ion qubits), Rigetti Computing (superconducting qubits), and D-Wave Systems (quantum annealing) are all pushing innovative quantum architectures. These companies, often backed by venture capital and government grants, contribute fresh ideas and sometimes specialize in certain applications or technologies. At the same time, governments around the world have made quantum technology a strategic priority. China, for example, has invested heavily in quantum research (including record-setting photonic quantum experiments), and the European Union has a large Quantum Flagship program. The result is a truly global race: the United States, Europe, China, and others are competing and collaborating in equal measure to claim leadership in the quantum era. Academia also remains a key player, with university labs producing many of the breakthroughs in quantum algorithms and physics. This mix of tech giants, startups, and public sector initiatives accelerates progress. Each player brings different strengths – whether it’s IBM’s comprehensive approach, Google’s bold experiments, Microsoft’s novel qubit design, or startups’ agility – and all are contributing to the rapid pace of innovation. The competition is intense, but it’s also driving collaboration in setting standards, training talent, and solving common challenges in quantum computing.

Implications and Applications of Quantum Advantage

Why does quantum advantage matter? The reason so many organizations are racing to build quantum computers is the transformative impact these machines could have across various industries and scientific fields. If quantum computers can outperform classical ones for certain tasks, even by a small margin, it could enable solutions to problems that today remain intractable. Here we discuss a few key areas – cryptography, optimization, and drug discovery – where quantum advantage would be especially significant:

- Cryptography and Cybersecurity: Perhaps the most high-profile implication of quantum computing is its effect on encryption. Many of the public-key schemes securing our digital world (including RSA and elliptic-curve cryptography, ECC) rely on problems like factoring large numbers or computing discrete logarithms, which are effectively impossible for classical computers to solve in reasonable time. However, Shor’s algorithm could solve both problems exponentially faster than the best known classical algorithms, meaning a sufficiently large, error-corrected quantum computer could break RSA encryption in a matter of hours or days. Such a machine would pose a serious threat to today’s public-key cryptography – it could “obliterate current data-encryption algorithms,” rendering intercepted encrypted data readable. (Symmetric ciphers such as AES are less exposed: Grover’s algorithm roughly halves their effective key length, which larger keys can offset.) This looming threat has spurred a race of its own to develop quantum-resistant (post-quantum) cryptography. In 2022, the U.S. National Institute of Standards and Technology (NIST) selected the first algorithms for standardization, and in 2024 it published the first finalized post-quantum standards. These new protocols run on ordinary classical computers yet are designed to withstand attacks from future quantum machines. On the flip side, quantum technology also opens new defensive possibilities: quantum devices can generate true random numbers for cryptographic keys, and quantum key distribution bases the security of key exchange on the laws of physics. Tech companies and governments are already working on quantum-enhanced security techniques, as well as planning the migration to post-quantum cryptography in anticipation of future quantum breakthroughs. In summary, quantum advantage in cryptography forces an overhaul of our security infrastructure but also provides tools to build next-generation security – making it a double-edged sword that national security agencies worldwide take very seriously.

- Optimization and Finance: A broad class of problems across business and science boils down to finding optimal solutions among an astronomically large set of possibilities – something classical computers often struggle with as problem size grows. Quantum computers have the potential to tackle certain optimization problems more efficiently, through algorithms like Grover’s search (which offers a quadratic speedup for unstructured search) or the quantum approximate optimization algorithm (QAOA), a heuristic for combinatorial optimization. Achieving quantum advantage here could revolutionize industries like finance, logistics, and manufacturing. For example, in finance, quantum algorithms are being explored for portfolio optimization, risk analysis, and fraud detection, aiming to crunch complex risk-return calculations faster than classical methods. Early tests indicate promising results in using small quantum processors to solve simplified portfolio optimization problems, potentially leading to better investment strategies. In logistics and supply chain management, companies face routing, scheduling, and resource-allocation challenges that are computationally hard (NP-complete or NP-hard problems). Quantum computers, likely working in a hybrid setup with classical solvers, could find more efficient routes or schedules that save time and cost, although quantum machines are not expected to solve NP-hard problems efficiently in general, so any gains will likely be problem-specific. Airlines, shipping companies, and ride-sharing services are among those eyeing quantum optimization to enhance operations. Another emerging area is the intersection of quantum computing and machine learning – sometimes dubbed quantum machine learning – which could improve pattern recognition or regression tasks; this area is nascent but being intensely researched by Google and others. While practical quantum advantage in optimization hasn’t been demonstrated yet, the economic incentive is huge. Even a modest advantage could translate to significant savings or gains when applied at scale in financial markets or global supply chains.

- Drug Discovery and Materials Science: Quantum advantage could be a game-changer in chemistry, pharmaceuticals, and materials engineering. These fields often require understanding the behavior of molecules and materials at the quantum level – a task that grows exponentially complex with the number of particles involved. Classical computers struggle to simulate quantum systems beyond a certain size because the computational cost explodes. Quantum computers are inherently suited to simulating other quantum systems, as Richard Feynman proposed in the early 1980s. A quantum computer reaching advantage in this domain could accurately model molecular interactions for complex chemicals, something beyond the reach of today’s supercomputers. In drug discovery, this means the ability to simulate how a drug molecule binds to a target protein or to explore the properties of novel compounds without needing exhaustive lab experiments. For instance, researchers at the Cleveland Clinic and other institutions are now using IBM’s quantum hardware to calculate small molecular energies and reaction dynamics, inching toward the kind of simulation that could streamline pharmaceutical development. In the near future, a quantum computer might identify promising drug candidates or optimal chemical catalysts in a fraction of the time it takes now, potentially revolutionizing pharmaceutical R&D timelines. Similarly, in materials science, quantum simulations could lead to the discovery of new materials (for batteries, superconductors, etc.) by enabling scientists to probe quantum properties directly. Even before full-scale quantum advantage is reached, quantum computers are being used as co-processors to tackle parts of these problems – for example, calculating the energy states of a molecule while a classical computer guides the overall search. As hardware and algorithms improve, the hope is to solve problems like designing a complex drug or material entirely on a quantum computer, which would be a landmark achievement for science and industry.
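Shor’s algorithm, central to the cryptography discussion above, is easiest to understand as a classical reduction wrapped around one quantum subroutine. Factoring N reduces to finding the order r of a base a modulo N (the smallest r > 0 with a^r ≡ 1 mod N). If r is even and a^(r/2) is not ≡ -1 mod N, then gcd(a^(r/2) - 1, N) is a nontrivial factor. In the sketch below, the hypothetical `find_order` function is a brute-force stand-in for the quantum period-finding step; it takes time exponential in the number of digits of N, which is precisely the cost Shor’s algorithm avoids. The code is meant only for tiny N.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Brute force here; this is the step Shor's algorithm does efficiently on a quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_part(n: int, a: int):
    """Try to split n using base a; returns a factor pair, or None if this a is unlucky."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g      # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None           # odd order: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None           # a**(r/2) = -1 mod n: retry with a different a
    p = gcd(y - 1, n)         # guaranteed nontrivial in this branch
    return p, n // p


print(shor_classical_part(15, 7))  # order of 7 mod 15 is 4 -> (3, 5)
print(shor_classical_part(21, 2))  # order of 2 mod 21 is 6 -> (7, 3)
```

In a full implementation, a would be chosen at random and the procedure repeated until a factor is found; a random base succeeds with probability at least one half for an odd N that is not a prime power.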

In addition to these areas, quantum advantage could impact numerous other fields: from enabling more precise climate and weather models, to improving artificial intelligence through faster linear algebra computations, to enhancing secure communications via quantum networks. The allure of quantum computing is that it offers a fundamentally new tool – a way to harness nature’s most basic rules for information processing. Industries that rely on heavy computation stand to benefit, which is why companies in sectors as diverse as automotive, aerospace, energy, and telecommunications are monitoring quantum developments closely. For now, many of these applications remain theoretical or at proof-of-concept stages. But if and when quantum advantage is clearly demonstrated, we can expect a surge in efforts to commercialize quantum solutions for real-world use.

Challenges on the Path to Quantum Advantage

For all the excitement around quantum computing, it’s important to underscore that reaching quantum advantage – and ultimately building large-scale quantum computers – is an extraordinarily difficult endeavor. The race has made impressive progress, but significant challenges remain before quantum computers can routinely outperform classical ones in practical tasks. Some of the key challenges include:

- Qubit Quality and Error Rates: Today’s qubits are highly error-prone. Environmental noise, imperfect control pulses, and fundamental quantum decoherence all contribute to errors in quantum operations. Unlike classical bits, qubits cannot be copied (the no-cloning theorem), and measuring them directly destroys the superpositions a computation relies on, so errors must be detected indirectly through carefully designed parity (syndrome) measurements. Even the best quantum hardware has error rates on the order of 0.1%–1% per operation, which sounds small but becomes prohibitive as computations involve hundreds or thousands of operations: at a 0.1% error rate, a circuit of 1,000 operations runs error-free only about 37% of the time. These errors cause results to become unreliable long before a computation of interesting size can finish. Overcoming this requires either significantly improving physical qubit fidelity or implementing robust error correction. Error correction itself is a monumental challenge: it takes many physical qubits to redundantly encode a single logical qubit that can be continuously corrected. Surface code schemes, for example, might require hundreds to a thousand or more physical qubits per logical qubit, meaning on the order of a million physical qubits might be needed to do anything truly revolutionary (since Shor’s algorithm to break RSA could require thousands of logical qubits). IBM’s and Google’s current devices, with roughly a hundred to just over a thousand physical qubits, are far from that scale, but both are actively working on demonstrations of small error-corrected logical qubits. Google’s recent demonstration that error rates improve as the number of qubits in an error-correcting code grows is a notable step. Likewise, IBM’s research into more efficient codes aims to reduce the overhead required. Still, achieving fully error-corrected, long computations is likely a years-long effort, and until then quantum computations must remain short and tailored to minimize errors, relying on techniques called error mitigation rather than full correction.

- Scalability and Engineering: Beyond the qubits themselves, building a quantum computer that can outperform classical supercomputers demands integrating a huge number of components with precision. Superconducting qubit systems (like those of IBM and Google) must operate at extremely low temperatures (millikelvin levels) in dilution refrigerators. Scaling from 50 qubits to 1,000 qubits, and then to a million, is an immense engineering challenge. More qubits mean more control electronics, more microwave wiring, and more potential points of failure. IBM’s approach with modular Quantum System Two addresses some scaling issues by linking separate modules, but that introduces complexity in ensuring qubits in different modules can still interact as needed. Other platforms like trapped ions face their own scaling hurdles (e.g., how to trap and manipulate many ions simultaneously). As devices grow, issues of crosstalk (qubits influencing each other unintentionally), calibration, and fabrication yield of quantum chips become critical. There’s also a bottleneck in input/output – feeding in control signals and reading out measurements from millions of qubits is not feasible with today’s techniques. New engineering solutions such as cryogenic control chips (like Microsoft’s use of a cryo-CMOS controller in its design) and novel packaging will be required. In short, going from laboratory experiments to a full-blown quantum supercomputer is akin to the leap from the first transistors to modern CPUs – it will require innovation at every level of system design. This is a marathon, not a sprint, and it’s why tech giants have decadal plans for quantum.

- Software and Algorithm Gaps: Another challenge is the relative paucity of quantum algorithms that are known to offer a dramatic speedup for practical problems. Shor’s algorithm (for factoring) and a few others like Grover’s (for unstructured search) are famous, but they are just the tip of the iceberg. Achieving quantum advantage might come from discovering new algorithms or new ways to apply quantum computers in hybrid workflows. Researchers are actively developing algorithms for variational quantum eigensolvers, quantum machine learning, optimization (QAOA), and sampling problems that play to current quantum hardware’s strengths. However, many of these algorithms are heuristic and it’s uncertain how well they will scale. Moreover, programming quantum computers remains a specialized skill – it requires understanding quantum mechanics and thinking in terms of probabilities and superpositions. There is a need for better software abstractions, compilers, and possibly entirely new programming paradigms to make quantum advantage accessible to ordinary developers. IBM’s efforts with Qiskit and high-level libraries, as well as Google’s Cirq and Microsoft’s Q# language, are aimed at bridging this gap, but it remains a significant hurdle. We might need “quantum-savvy” algorithms co-developed with domain experts to truly make use of quantum advantage once hardware is capable. Until then, a quantum computer might achieve advantage on paper, but leveraging it for real-world impact could require substantial additional innovation in software and theory.

- Verification and Benchmarking: How do we know a quantum computer’s answer is correct if a classical computer cannot feasibly verify it? This meta-challenge will arise at the moment of quantum advantage. In the interim, researchers use smaller instances of problems (that classical computers can solve) to test and validate quantum routines. But when a quantum machine tackles a problem beyond classical reach, new methods are needed to build trust in the result. This could include statistical tests, cross-validation by different quantum techniques, or checking consistency with theoretical predictions. Establishing benchmark problems that can showcase quantum advantage while still being checkable in some way is an active area of research. The community is also considering standards for claiming advantage – for instance, requiring that results be reproducible on independent devices or that they solve a problem of recognized importance. As quantum computing transitions from lab experimentation to practical application, developing reliable metrics and validation techniques will be crucial so that users can trust quantum-enabled solutions.
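Two pieces of arithmetic behind the qubit-quality challenge can be checked directly: how quickly per-operation errors compound, and how many physical qubits one surface-code logical qubit consumes. The sketch assumes errors strike each operation independently (real noise is often correlated) and counts qubits for a single rotated surface-code patch of distance d (d² data qubits plus d² - 1 measurement qubits), ignoring the extra qubits needed for routing and magic-state distillation, so real overheads are higher. Function names are illustrative.

```python
def circuit_success_probability(error_per_op: float, num_ops: int) -> float:
    """Probability that none of num_ops operations fails, assuming independent errors."""
    return (1 - error_per_op) ** num_ops


def rotated_surface_code_qubits(distance: int) -> int:
    """Physical qubits in one distance-d rotated surface-code patch:
    d*d data qubits plus d*d - 1 measurement qubits (no routing or distillation overhead)."""
    return 2 * distance * distance - 1


for p in (0.001, 0.01):
    for ops in (100, 1_000, 10_000):
        prob = circuit_success_probability(p, ops)
        print(f"error/op={p}, ops={ops}: P(no error) = {prob:.3g}")

for d in (3, 7, 25):
    print(f"distance {d}: {rotated_surface_code_qubits(d)} physical qubits per logical qubit")
```

At a 1% error rate, a 1,000-operation circuit almost never finishes cleanly, and a distance-25 patch already needs 1,249 physical qubits for a single logical qubit.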

In summary, the road to quantum advantage is challenging, but not insurmountable. Each of these obstacles – qubit errors, scaling, algorithm development, and verification – is the focus of intense research efforts worldwide. Progress is being made on all fronts: qubit fidelities improve each year, engineers are devising scalable architectures, new algorithms are published every month, and benchmarking methods are being discussed in the quantum community. It’s this combination of physics, engineering, and computer science challenges that makes the quantum computing field both extremely hard and extremely exciting. The race to overcome these challenges is as important as the race between companies – in fact, many breakthroughs (like better error-correcting codes or cryogenic controllers) are quickly shared across the field, benefiting all players. Ultimately, solving these technical hurdles will determine when we truly reach a robust quantum advantage.

Outlook and Conclusion

As of 2025, we stand at the cusp of quantum advantage. IBM, Google, Microsoft, and others have built prototypes that tantalize with computational feats just beyond classical reach. IBM has demonstrated “quantum utility” and charts a clear path toward a fault-tolerant machine by 2029. Google has showcased rapid progress in raw quantum computational power and error reduction. Microsoft’s long-term bet on topological qubits aims to crack the toughest part of the problem – qubit quality – in one bold stroke. Venture capital and government funding continue to pour into the quantum sector, fueling a pace of innovation that would have been hard to imagine a decade ago. Experts offer varying timelines for widespread quantum computing: some optimists see specialized quantum advantage happening in the next 2–5 years, with broader commercial use in 5–10 years, whereas others caution it might take a decade or more to fully realize quantum computing’s potential. However, there is a growing consensus that the first clear quantum advantage for a practical problem will likely be achieved within this decade. In fact, IBM researchers predict that by 2026 the community will probably accept the first instances of true quantum advantage – perhaps in fields like quantum chemistry or optimization, where even a small quantum edge is valuable.

What happens when that milestone is reached? In the immediate term, a verified quantum advantage will be a scientific triumph on par with historic achievements in computing. It will validate decades of research and justify the enormous investments made. We can expect an acceleration of interest and funding, a kind of “quantum gold rush,” as industries seek to be early adopters of the new capability. The companies leading the race (IBM, Google, Microsoft, and others) will likely offer cloud-based quantum services that advertise tasks no classical cloud can perform. The milestone will also spark important discussions around ethics, security, and policy. For example, if quantum computers eventually become powerful enough to break widely used public-key cryptography, governments will need to respond swiftly to protect sensitive data. International competition could intensify if one country or company pulls significantly ahead in quantum technology, raising concerns about monopolies or strategic dominance. At the same time, the collaborative nature of the field, with its academic conferences and cross-company research papers, suggests that breakthroughs will diffuse relatively quickly. Notably, IBM, Google, and others often publish their quantum advances openly. This mix of competition and cooperation means that once quantum advantage is achieved, it is likely to be replicated and built upon by others in short order.

In the longer term, quantum advantage is just a waypoint. The ultimate vision is fault-tolerant quantum computing at scale: machines that can reliably run any quantum algorithm, however long, and integrate seamlessly with classical computing infrastructure. In that future, perhaps a decade or two away, quantum computers might be commonplace in data centers, working alongside classical supercomputers as quantum co-processors, much as GPUs accelerate certain workloads today. Reaching that stage will likely transform technology and society in ways we can only partially foresee, from discovering new physics to optimizing global systems. But it all begins with that first demonstration that, yes, a quantum computer can do something profound that no ordinary computer can.

As of now, IBM appears poised to claim such a milestone, given its steady advancements and holistic strategy. With its latest progress in both hardware and software, IBM is optimistic about achieving quantum advantage before its rivals, a development that could “potentially revolutionize industries worldwide,” to quote one assessment. Yet, whether IBM reaches it first or a competitor does, the dawn of quantum advantage will mark the start of a new chapter in computing. Just as the advent of classical supercomputers changed the world, so too will the coming quantum computers – ushering in capabilities that were once purely science fiction. The race continues, but the finish line for this critical first goal is finally in sight. And once that line is crossed, we will enter a new era where quantum machines begin to deliver on their vast promise, solving problems previously thought unsolvable and transforming how we approach technology and innovation.

Sources: The information in this article is based on the latest reports and expert insights on quantum computing as of 2024–2025. Key references include IBM’s quantum research blog on the definition and roadmap of quantum advantage, a Fast Company interview detailing IBM’s hardware milestones and strategy, reports on Google’s quantum supremacy and error-correction experiments, Microsoft’s announcement of topological qubit development, and analysis of emerging quantum applications in cryptography, finance, and drug discovery. These and other sources are cited throughout the text to provide a fact-based, up-to-date picture of the quantum computing landscape.

Discover more from RETHINK! SEEK THE BRIGHT SIDE