Sound and Musical Instruments: A Literary and Neuroscientific Exploration

Prologue: The Woman Who Heard Herself Heal

When she first arrived at the rehabilitation clinic, Anna could hardly speak. A mild left-hemisphere stroke had stolen her words, leaving her sentences fragmented and her right hand trembling. Before that morning, she had been a music teacher for thirty years — a woman whose world was built on tone and rhythm. She used to say that “a melody is just a thought with wings.” Now, that melody was gone.

Her neurologist followed the usual path — medication, physiotherapy, and speech exercises. Progress was painfully slow. Anna avoided mirrors and conversation; she lived inside silence. Then one day, a young therapist rolled an upright piano into her therapy room. It was old, slightly out of tune, and its wooden surface bore the marks of many hands.

At first, Anna sat motionless. The therapist played a few bars of Schumann’s Träumerei. Something flickered across Anna’s face — recognition, perhaps memory. Her left hand twitched, reaching for a phantom keyboard. The next day, she tried pressing a few notes herself. A week later, she was humming short phrases. By the third week, she was playing scales.

The turning point came when the therapist asked her to sing the words she could not speak. Instead of saying “Good morning,” she sang it — clumsy at first, but audible. Over the following months, this musical scaffolding became her bridge back to language. She played and sang every day, retraining her mouth, her memory, and her mind.

Functional MRI scans later showed remarkable findings: the right hemisphere’s auditory and motor areas had reorganized to take over some of the speech functions lost to the stroke. Her dopamine and serotonin levels improved, and her level of the stress hormone cortisol fell measurably during her sessions. What medication and repetition alone had failed to do, music accomplished through emotion and rhythm.

In the published case report from Frontiers in Neurology, the clinicians described her recovery as “a living model of melodic intonation therapy.” Yet beyond data and scans was something no graph could capture: when she played, she smiled again. Each note was an act of remembering — not only how to move or speak, but how to be.

Anna’s story is not unique. Similar responses have been documented in other conditions: people with Parkinson’s disease walking more fluidly to a rhythm, and people with Alzheimer’s disease recognizing forgotten faces when familiar songs play. These stories blur the boundary between art and physiology, showing how music rewires the brain, not metaphorically but literally.

As a scientist, a certified expert in longevity medicine, and an explorer in neuroscience, I have studied these patterns on brain scans; as a writer, I have felt them in human stories. Music is not merely sound; it is a force that changes the architecture of emotion and memory.

This essay is about that force. It explores how sound, a simple vibration of air molecules, becomes thought, movement, and meaning. We will begin with the physics of sound — the invisible threads from which music is woven — and follow their journey through instruments, minds, and cultures, from the laboratories of modern neuroscience to the ancient philosophies of East and West.

⸻

The Physics of Sound

At its most fundamental level, sound is a physical event — a mechanical wave traveling through a medium. When we hear a note from a violin, a voice, or even the hum of a distant refrigerator, what reaches our ears are tiny fluctuations in air pressure, pulsing at precise frequencies.

Sound is defined scientifically as “a mechanical disturbance traveling through an elastic medium.” That means any substance capable of vibrating and returning to its original shape — air, water, metal, or wood — can carry sound. Strike a drum, and its stretched membrane pushes and pulls the surrounding air molecules; they, in turn, jostle their neighbors, creating ripples of compression and rarefaction. These ripples radiate outward as a sound wave.

If you could see a pure tone plotted on a graph, it would appear as a series of smooth oscillations: a sine wave. Each cycle consists of a crest (compression) and a trough (rarefaction), and the number of these cycles per second is the frequency, measured in hertz (Hz). Most real sounds, from a voice to a violin, are more complex waveforms that can be described as sums of many such sine waves.
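To make “cycles per second” concrete, here is a minimal Python sketch that samples a pure tone and counts its cycles. The function names, the 44,100 Hz sample rate, and the zero-crossing counting approach are illustrative choices, not part of any particular audio library.

```python
import math


def pure_tone(freq_hz: float, duration_s: float, sample_rate: int = 44_100,
              amplitude: float = 1.0) -> list[float]:
    """Sample amplitude * sin(2*pi*f*t) at evenly spaced instants t = n / sample_rate."""
    n_samples = int(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]


def count_cycles(samples: list[float]) -> int:
    """Count upward zero crossings (trough-to-crest transitions); each marks a new cycle."""
    return sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)


tone = pure_tone(440.0, duration_s=1.0)
# Roughly 440 crossings in one second (the cycle that starts at t = 0 is not counted)
print(count_cycles(tone))
```

Doubling `freq_hz` doubles the count, which is all “frequency” means: cycles per unit of time.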

Frequency and Pitch

Frequency corresponds directly to pitch — the highness or lowness of a sound. A flute’s delicate tone may vibrate at 2,000 Hz, while a bass drum might rumble at 50 Hz. Humans typically hear frequencies between 20 and 20,000 Hz, though this range narrows with age.

High frequencies have short wavelengths; low frequencies have long ones. That is partly why deep sounds seem to travel farther and pass through walls: air and building materials absorb long waves less, and long waves bend more easily around obstacles. In music, frequency defines the note, but it is the interplay of frequencies that creates harmony and chords, while overtones give each voice its color.
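The relationship between frequency and wavelength is simply wavelength = speed / frequency. A short sketch, assuming the 343 m/s speed of sound in room-temperature air quoted later in this essay, shows how large the difference is across the musical range (names are illustrative):

```python
SPEED_OF_SOUND_AIR_M_S = 343.0  # air at roughly 20 °C


def wavelength_m(freq_hz: float, speed_m_s: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Wavelength in metres: lambda = v / f."""
    return speed_m_s / freq_hz


examples = {
    "lower limit of hearing": 20.0,
    "bass drum (from the text)": 50.0,
    "flute (from the text)": 2_000.0,
    "upper limit of hearing": 20_000.0,
}
for label, freq in examples.items():
    print(f"{label:>28}: {freq:>8.0f} Hz -> {wavelength_m(freq):.4f} m")
```

A 50 Hz bass note stretches about 6.9 m, longer than most rooms, while a 2,000 Hz flute tone is about 17 cm. Waves much larger than an obstacle bend around it, which is part of why bass leaks through walls.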

Amplitude and Loudness

Amplitude measures the wave’s strength: how far air molecules are pushed from their resting positions. Greater amplitude means a louder sound. Sound level is usually expressed in decibels (dB), a logarithmic scale on which every 20 dB step multiplies the pressure amplitude by ten. A whisper might register at 30 dB, normal conversation at 60 dB, and a thunderclap around 120 dB, which approaches the threshold of pain.
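Because the decibel scale is logarithmic, equal steps in dB are equal ratios of pressure. A minimal sketch of the standard sound-pressure-level formula, L = 20·log10(p / p₀) with the conventional reference p₀ = 20 µPa for air, converts the text’s example levels back to pressures (function names are illustrative):

```python
import math

P_REF_PA = 20e-6  # reference pressure for sound pressure level in air (20 micropascals)


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB for an RMS pressure in pascals."""
    return 20 * math.log10(pressure_pa / P_REF_PA)


def pressure_from_spl(level_db: float) -> float:
    """Inverse of spl_db: RMS pressure in pascals for a level in dB."""
    return P_REF_PA * 10 ** (level_db / 20)


for label, level in [("whisper", 30), ("conversation", 60), ("thunderclap", 120)]:
    print(f"{label:>12}: {level:>3} dB ~ {pressure_from_spl(level):.4g} Pa")
```

The thunderclap’s 120 dB corresponds to 1,000 times the pressure amplitude of 60 dB conversation and, since intensity scales with pressure squared, a million times the intensity.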

Yet loudness is not purely physical. The brain interprets sound intensity subjectively — influenced by frequency, duration, and even emotion. A lullaby sung softly by a loved one can feel more powerful than an explosion heard from afar.

Timbre and Texture

If frequency and amplitude determine what we hear, timbre determines how we perceive it. It is timbre — the harmonic texture or color of sound — that lets us distinguish a violin from a flute playing the same note at the same volume.

Scientifically, timbre arises from the mix of overtones, the frequencies that accompany the main pitch. Each instrument produces its own harmonic signature. In its low register, a clarinet emphasizes odd-numbered harmonics, giving it a hollow, woody tone; a violin’s bowed string generates a dense spectrum of harmonics, rich and bright.
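Timbre as a harmonic recipe can be sketched with additive synthesis. The example below is an idealization, not a model of any real instrument: it assumes harmonic amplitudes fall off as 1/n, and compares an odd-harmonics-only spectrum (closer to the clarinet’s low register) with a full harmonic series (closer to the sawtooth-like motion of a bowed string). Function names and parameters are hypothetical.

```python
import math


def harmonic_spectrum(f0: float, n_harmonics: int, odd_only: bool) -> list[tuple[float, float]]:
    """(frequency, amplitude) pairs for harmonics n = 1..n_harmonics with amplitude 1/n."""
    return [(n * f0, 1.0 / n) for n in range(1, n_harmonics + 1)
            if not odd_only or n % 2 == 1]


def sample_at(spectrum: list[tuple[float, float]], t: float) -> float:
    """Additive synthesis: sum the sine components at time t (seconds)."""
    return sum(amp * math.sin(2 * math.pi * freq * t) for freq, amp in spectrum)


clarinet_like = harmonic_spectrum(220.0, 9, odd_only=True)
string_like = harmonic_spectrum(220.0, 9, odd_only=False)
print([f for f, _ in clarinet_like])  # [220.0, 660.0, 1100.0, 1540.0, 1980.0]
print(len(string_like))               # 9
```

Both spectra share the same 220 Hz fundamental, so they have the same pitch; only the overtone recipe, and therefore the timbre, differs.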

The envelope — how a sound begins, sustains, and fades (attack, decay, sustain, release) — also shapes its identity. A piano’s sharp hammer strike and quick decay make it percussive; a violin’s slow swell gives it a singing quality. These nuances explain why two instruments can play the same note and still evoke entirely different emotions.
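The four envelope stages can be written as a piecewise gain curve. This is a minimal linear ADSR sketch of the kind used in synthesizers; the parameter values for the “piano-like” and “violin-like” notes are illustrative guesses, and real instrument envelopes are neither linear nor this simple.

```python
def adsr_gain(t: float, attack: float, decay: float, sustain: float,
              release: float, note_off: float) -> float:
    """Linear ADSR gain (0..1) at time t seconds.

    Assumes attack > 0, decay > 0, release > 0 and note_off >= attack + decay.
    """
    if t < 0:
        return 0.0
    if t < attack:                      # rise from silence to full level
        return t / attack
    if t < attack + decay:              # fall from full level to the sustain level
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    if t < note_off:                    # hold while the note is held
        return sustain
    if t < note_off + release:          # fade to silence after the note is released
        return sustain * (1.0 - (t - note_off) / release)
    return 0.0


piano_like = dict(attack=0.005, decay=0.8, sustain=0.0, release=0.1, note_off=1.0)
violin_like = dict(attack=0.3, decay=0.1, sustain=0.8, release=0.2, note_off=1.0)

for t in (0.01, 0.15, 0.5, 1.1):
    print(t, round(adsr_gain(t, **piano_like), 3), round(adsr_gain(t, **violin_like), 3))
```

The piano-like note reaches full level in 5 ms and immediately starts dying away; the violin-like note takes 300 ms to swell and then holds steady, which is the percussive-versus-singing contrast described above.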

Velocity and Medium

Sound’s speed varies with its medium. In air at room temperature, it travels at about 343 meters per second, in water at 1,500 m/s, and through steel at over 5,000 m/s. This is why underwater sounds reach us so quickly and why solid objects conduct sound efficiently — think of pressing your ear to a railway track to detect a distant train.
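The speeds quoted above translate directly into arrival times. In this sketch the speeds are the text’s rounded figures (5,000 m/s is the text’s lower bound for steel), and the temperature formula is a common linear approximation for dry air, not an exact law; function names are illustrative.

```python
SPEED_M_S = {"air (about 20 °C)": 343.0, "water": 1_500.0, "steel (lower bound)": 5_000.0}


def travel_time_s(distance_m: float, speed_m_s: float) -> float:
    """Time for sound to cover a distance at a constant speed."""
    return distance_m / speed_m_s


def speed_in_dry_air(temp_c: float) -> float:
    """Linear approximation: about 331.3 m/s at 0 °C, rising about 0.606 m/s per °C."""
    return 331.3 + 0.606 * temp_c


for medium, speed in SPEED_M_S.items():
    print(f"1 km through {medium}: {travel_time_s(1_000, speed):.3f} s")
print(f"air at 20 °C: {speed_in_dry_air(20):.1f} m/s")
```

Over one kilometre, sound needs roughly 2.9 s through air but about 0.2 s or less through steel, which is why the rail carries news of the train first.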

This finite velocity also produces familiar phenomena such as echoes and the Doppler effect, where the pitch of an ambulance siren seems higher as it approaches and lower as it moves away — a direct consequence of wave compression and expansion relative to motion.
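For a moving source and a stationary listener, the classical Doppler formula is f′ = f · c / (c ∓ vₛ), with the minus sign while the source approaches. A minimal sketch, assuming a hypothetical 700 Hz siren on a vehicle moving at 25 m/s through 343 m/s air:

```python
C_AIR_M_S = 343.0


def doppler_moving_source(f_source_hz: float, v_source_m_s: float,
                          approaching: bool, c_m_s: float = C_AIR_M_S) -> float:
    """Frequency heard by a stationary listener from a source moving along the line of sight."""
    if v_source_m_s >= c_m_s:
        raise ValueError("formula only applies below the speed of sound")
    denominator = c_m_s - v_source_m_s if approaching else c_m_s + v_source_m_s
    return f_source_hz * c_m_s / denominator


siren_hz, speed = 700.0, 25.0
print(f"approaching: {doppler_moving_source(siren_hz, speed, approaching=True):.1f} Hz")
print(f"receding:    {doppler_moving_source(siren_hz, speed, approaching=False):.1f} Hz")
```

The same siren is heard at about 755 Hz on approach and about 652 Hz as it recedes; the drop the listener notices happens as the vehicle passes.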

⸻

Sound, in essence, is vibration made visible to the mind. Anna’s recovery was built upon these very principles — frequency activating motor circuits, rhythm entraining her basal ganglia, harmonic resonance stimulating the brain’s emotional centers.

What we call music is nothing more and nothing less than organized vibration, and yet from that simple foundation arises everything from Mozart’s symphonies to a mother’s lullaby.

Let us now turn from pure physics to the human ingenuity that transforms vibration into art — to the musical instruments through which we shape sound into meaning.

⸻

Classification and Structure of Musical Instruments

Every instrument is a sculpted bridge between physics and emotion. The earliest human flutes, carved from bird bones forty thousand years ago, carried the same principle as a modern violin or digital synthesizer: make matter vibrate, and let it speak. Across continents and centuries, people learned to coax sound from wood, stone, skin, and air — not by accident, but by a deep intuition of nature’s laws.

Scientists classify instruments today by how those vibrations are produced, rather than by culture or shape. The Hornbostel–Sachs system remains the most comprehensive framework:

- Idiophones create sound from their own substance vibrating — like a bell, cymbal, or xylophone bar. Strike them, and the material itself sings.

- Membranophones use a stretched surface — a drumhead — to generate vibration. Every heartbeat is nature’s own drum.

- Chordophones rely on stretched strings: violins, guitars, harps, and pianos. Their voices come from tension, length, and resonance.

- Aerophones use vibrating air columns, as in flutes and trumpets; the human voice, driven by air from the lungs, works on a closely related principle.

- Electrophones, a modern invention, generate or amplify sound electrically — the synthesizer, the electric guitar, the theremin.
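Because the Hornbostel–Sachs scheme sorts instruments by what vibrates, it maps naturally onto a lookup table. The numbers 1 to 5 are the system’s own top-level class codes; the example instruments and the `describe` helper are illustrative, and the real system subdivides each class much further.

```python
# Top-level Hornbostel–Sachs classes: code -> (name, what vibrates)
HORNBOSTEL_SACHS = {
    1: ("Idiophones", "the instrument's own material"),
    2: ("Membranophones", "a stretched membrane"),
    3: ("Chordophones", "one or more stretched strings"),
    4: ("Aerophones", "air itself"),
    5: ("Electrophones", "an electrical circuit"),
}

# Illustrative examples drawn from the list above
EXAMPLE_INSTRUMENTS = {"xylophone": 1, "bell": 1, "timpani": 2, "violin": 3,
                       "harp": 3, "flute": 4, "trumpet": 4, "theremin": 5}


def describe(instrument: str) -> str:
    """Return the top-level class of a known example instrument."""
    code = EXAMPLE_INSTRUMENTS[instrument]
    name, vibrator = HORNBOSTEL_SACHS[code]
    return f"{instrument}: class {code}, {name} (sound from {vibrator})"


print(describe("violin"))  # violin: class 3, Chordophones (sound from one or more stretched strings)
```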

Though this classification is global, every culture adds its poetic variation. Ancient India’s Natya Shastra divided instruments into tat (strings), susira (winds), avanaddha (drums), and ghana (solid percussion). Ancient China arranged them by material: silk, bamboo, wood, metal, clay, skin, gourd, and stone — an entire orchestra symbolizing harmony between heaven and earth.

A violin, for instance, works like a precision physics experiment: friction from the bow sets the string vibrating; the bridge transmits that vibration to the wooden top plate; the hollow body and the air inside it resonate, amplifying and coloring the tone. When a skilled player varies bow pressure or finger placement, she is manipulating wave amplitude and frequency at will, turning mathematics into feeling.
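The physics behind the violinist’s fingers is captured by Mersenne’s laws for an ideal string fixed at both ends: the fundamental is f = (1 / 2L) · √(T / μ), where L is the vibrating length, T the tension, and μ the mass per unit length. The numbers below are illustrative assumptions, not measurements of a real violin string, and a real string is slightly stiff and inharmonic.

```python
import math


def string_frequency(length_m: float, tension_n: float, mass_per_length_kg_m: float,
                     harmonic: int = 1) -> float:
    """Ideal-string frequency of the given harmonic: f_n = n / (2L) * sqrt(T / mu)."""
    return harmonic / (2 * length_m) * math.sqrt(tension_n / mass_per_length_kg_m)


# Hypothetical open string: 0.33 m long, 50 N tension, 0.6 g per metre
LENGTH_M, TENSION_N, MU_KG_M = 0.33, 50.0, 0.6e-3
open_string = string_frequency(LENGTH_M, TENSION_N, MU_KG_M)
stopped_halfway = string_frequency(LENGTH_M / 2, TENSION_N, MU_KG_M)  # finger at the midpoint
tighter = string_frequency(LENGTH_M, 4 * TENSION_N, MU_KG_M)          # tension quadrupled

print(round(open_string, 1), round(stopped_halfway / open_string, 3), round(tighter / open_string, 3))
```

Halving the vibrating length doubles the frequency (an octave), and so does quadrupling the tension, which is why tuning pegs adjust tension while fingers adjust length.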

A flute is a study in air dynamics. The musician directs the air stream across the sharp edge of the embouchure hole; the jet flips rapidly from one side of the edge to the other, and the air column inside the tube locks that oscillation to frequencies determined by the tube’s length and open holes. Covering or uncovering holes changes the effective length of the column, altering pitch. The same principle applies to organ pipes and even a child blowing across a bottle’s rim, a continuum from folk play to cathedral grandeur.
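A flute behaves approximately like a pipe open at both ends, whose resonances are fₙ = n · v / (2L). This idealized sketch ignores the end corrections that make a real tube sound slightly lower than its physical length predicts; the lengths are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0


def open_pipe_frequency(effective_length_m: float, harmonic: int = 1,
                        speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Resonance of an ideal pipe open at both ends: f_n = n * v / (2 * L)."""
    return harmonic * speed_m_s / (2 * effective_length_m)


# Opening tone holes shortens the effective air column and raises the pitch
for length in (0.60, 0.50, 0.40):
    print(f"{length:.2f} m column -> {open_pipe_frequency(length):.1f} Hz")

# Overblowing selects the next resonance of the same column: an octave higher
print(open_pipe_frequency(0.60, harmonic=2) / open_pipe_frequency(0.60))
```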

Even percussion — often dismissed as “mere rhythm” — demonstrates remarkable complexity. The tabla of India, with its intricate finger strokes, can produce over thirty distinct tones from a single drumhead. The timpani in Western orchestras change pitch by tightening or loosening their membranes. Each demonstrates how mechanical vibration transforms into musical voice.

What is fascinating, from a neuroscientific view, is that the human auditory system can detect pitch differences as small as two or three hertz for tones around 1,000 Hz, and timing differences of a few milliseconds. We are not only listeners but precision instruments ourselves: biological oscillators tuned to the subtleties of our own creations.
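Pitch perception is roughly logarithmic, so a fixed difference in hertz means more at low frequencies than at high ones. Musicians measure intervals in cents (1,200 per octave); a one-line conversion shows what a two-hertz difference amounts to at different starting pitches. The helper name is illustrative.

```python
import math


def cents(f1_hz: float, f2_hz: float) -> float:
    """Size of the interval from f1 to f2 in cents (100 cents = one equal-tempered semitone)."""
    return 1200 * math.log2(f2_hz / f1_hz)


for base in (100.0, 440.0, 1_000.0, 4_000.0):
    print(f"{base:>6.0f} Hz vs {base + 2:>6.0f} Hz: {cents(base, base + 2):5.2f} cents")
```

Two hertz is about a third of a semitone at 100 Hz but only about 3.5 cents at 1,000 Hz.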

⸻

The Emotional, Cognitive, and Physiological Effects of Music on the Brain

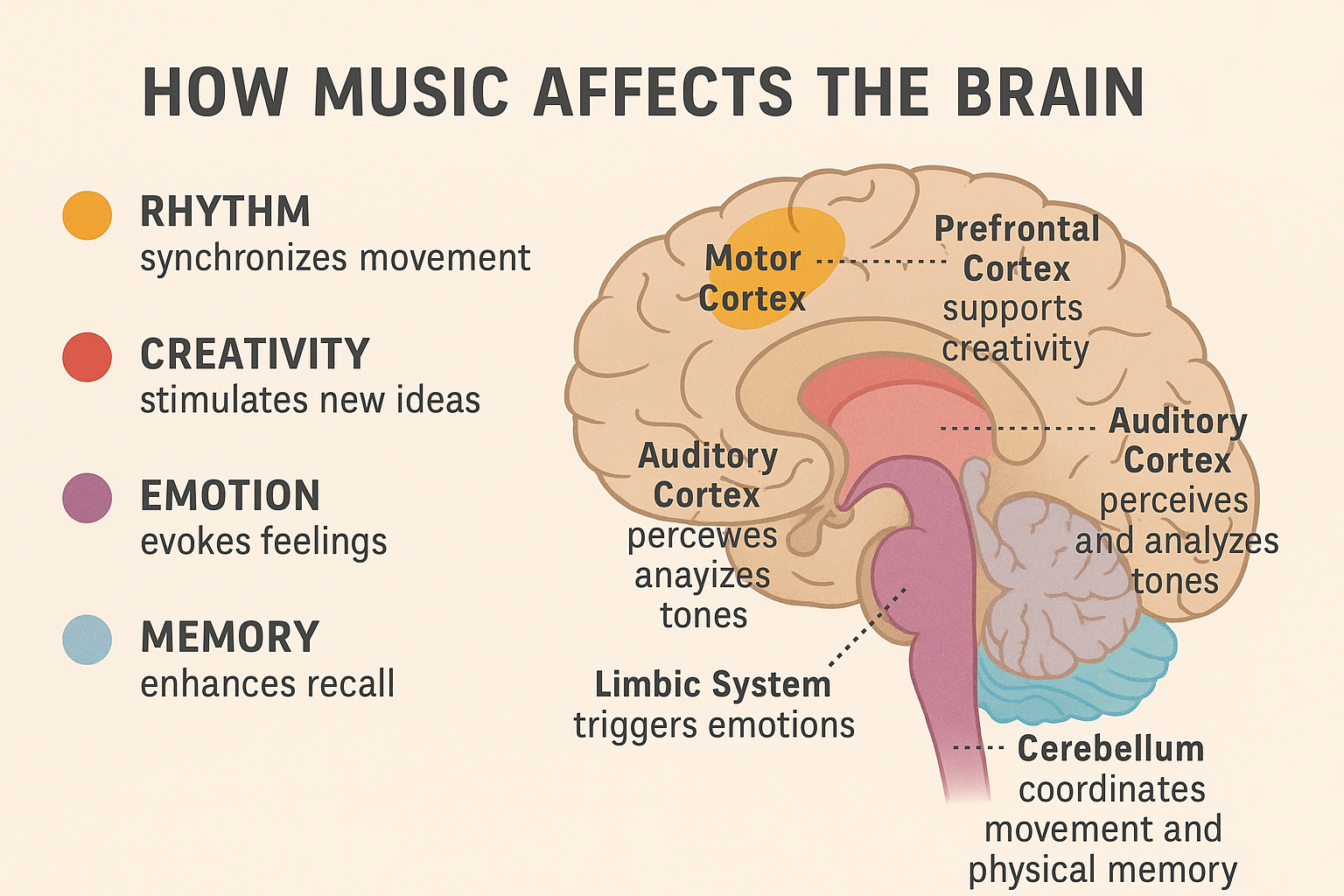

Every musical tone that enters the ear continues its journey deep into the brain. From the eardrum to the cochlea, and onward to the auditory cortex, music unfolds across neural landscapes that govern motion, memory, and emotion.

1. Music and Emotion

The emotional power of music is not metaphorical. Studies using fMRI reveal that listening to moving music activates the limbic system and reward circuitry, including the amygdala, hippocampus, and nucleus accumbens, the same regions engaged by food, sex, and social bonding. In a landmark PET-imaging study at McGill University, researchers observed dopamine release in the nucleus accumbens when listeners reached the peak “chill” moments in their favorite songs.

The reason music can bring tears or joy lies in anticipation and resolution. When melody and rhythm play with our expectations — delaying a cadence, resolving a dissonance — the brain’s predictive circuits light up. These neural “plot twists” are rewarded with dopamine bursts, much like a satisfying narrative ending.

Rhythm itself affects physiology. The basal ganglia — deep motor structures — lock onto external beats, synchronizing neural oscillations with the tempo of the music. This is why a steady rhythm can make Parkinson’s patients walk more steadily. Studies show rhythmic auditory cues can reduce gait freezing and even improve balance.

2. Music and Cognition

Music is also a workout for the brain’s executive and memory systems. Every child who learns an instrument trains attention, working memory, and coordination simultaneously. Neuroimaging shows that professional musicians, especially those who began training in early childhood, have a larger corpus callosum (the fiber bundle connecting the two hemispheres) and enhanced activity in motor and auditory cortices.

Even passive listening strengthens associative memory. A melody heard during a joyful moment becomes a cue for that emotion later, a principle now used in dementia therapy. Alzheimer’s patients who cannot recall family names can often still sing entire songs from their youth. One likely reason is that musical memory is distributed across cortical and subcortical regions, some of which are relatively spared by neurodegenerative disease.

Proust’s fictional Vinteuil Sonata was not fantasy — it was neuropsychological realism before its time.

3. Music and Physiology

Music modulates the body as surely as it does the mind. Heart rate, respiration, and blood pressure tend to follow tempo and tone, and even hormone levels shift in response. Slow, low-frequency music encourages parasympathetic (rest-and-digest) dominance; fast, high-pitched music raises arousal.

Clinical research shows music can reduce cortisol and noradrenaline levels in stressed patients, lower blood pressure, and even reduce perceived pain by triggering endorphin release. In hospital wards, patients listening to calming instrumental music often require less anesthesia and report less postoperative pain.

From this perspective, music acts as a biochemical dialogue — vibrating air molecules speaking directly to cellular receptors through the mediating ear and nervous system.

⸻

The Historical Development of Musical Systems in East and West

Western Traditions

Western music traces its theoretical lineage to the Greeks, who first quantified sound. The discovery attributed to Pythagoras, that harmonious intervals correspond to simple numerical ratios (2:1 for the octave, 3:2 for the fifth), fused physics with philosophy: harmony as order.
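The Pythagorean idea can be checked with exact fractions. This sketch, using Python’s standard `fractions` module, stacks pure 3:2 fifths, folds each result back into a single octave, and recovers the ratios of the Pythagorean major scale. It also computes the Pythagorean comma, the small mismatch that appears after twelve fifths and that later tuning systems had to distribute somewhere. The helper name is illustrative.

```python
import math
from fractions import Fraction

FIFTH = Fraction(3, 2)
OCTAVE = Fraction(2, 1)


def fold_into_octave(ratio: Fraction) -> Fraction:
    """Multiply or divide by 2 until the ratio lies in [1, 2)."""
    while ratio >= OCTAVE:
        ratio /= OCTAVE
    while ratio < 1:
        ratio *= OCTAVE
    return ratio


# One fifth down and five fifths up give the seven notes of a diatonic scale
scale = sorted(fold_into_octave(FIFTH ** k) for k in range(-1, 6))
print([str(r) for r in scale])  # ['1', '9/8', '81/64', '4/3', '3/2', '27/16', '243/128']

# Twelve pure fifths overshoot seven octaves by the Pythagorean comma
comma = FIFTH ** 12 / OCTAVE ** 7
print(comma, round(1200 * math.log2(float(comma)), 2))  # 531441/524288 23.46
```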

Medieval Europe transformed this into spiritual art: Gregorian chant, sung in unison, aimed to reflect divine perfection. But soon came polyphony — multiple voices intertwining — and the rise of counterpoint. From Bach’s fugues to Mozart’s symphonies, the Western tradition evolved through tonal harmony, codified by mathematical tuning systems such as equal temperament.

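Equal temperament divides the octave into twelve equal steps, sacrificing the purity of simple-ratio intervals for flexibility: a sonic metaphor for modernity itself, compromise in pursuit of universality. Tempered tuning let Bach’s Well-Tempered Clavier roam through all twenty-four major and minor keys (Bach most likely intended one of the unequal “well” temperaments, equal temperament’s close relative), gave Beethoven room to stretch emotional range through bold modulation, and let jazz improvise freely.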
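Equal temperament’s compromise is easy to quantify: each semitone is a frequency ratio of 2^(1/12), so a tempered fifth is 2^(7/12) rather than 3/2. A minimal sketch, assuming the common modern reference A4 = 440 Hz (historical pitch standards varied); function names are illustrative:

```python
import math

A4_HZ = 440.0  # assumed reference pitch


def equal_tempered_hz(semitones_from_a4: int) -> float:
    """Frequency n equal-tempered semitones above (or below, if negative) A4."""
    return A4_HZ * 2 ** (semitones_from_a4 / 12)


def cents(ratio: float) -> float:
    """Interval size in cents for a frequency ratio."""
    return 1200 * math.log2(ratio)


for name, pure, steps in [("fifth", 3 / 2, 7), ("major third", 5 / 4, 4)]:
    tempered = 2 ** (steps / 12)
    print(f"{name}: pure {pure:.4f}, tempered {tempered:.4f}, "
          f"difference {cents(tempered) - cents(pure):+.2f} cents")

print(f"C5 = {equal_tempered_hz(3):.2f} Hz")
```

The tempered fifth is flat by about 2 cents, barely audible, while the tempered major third is about 14 cents sharp of the pure 5:4, the price paid for being able to play in every key.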

Eastern Traditions

In India, the raga system developed in parallel, guided less by mathematics and more by metaphysics. A raga is not just a scale but a living entity, each with its own emotional essence, season, and time of day. Where Western harmony travels, progressing from chord to chord and key to key, a raga dwells, exploring microtonal nuance around a single tonal center.

Indian music theory speaks of 22 shrutis (microtones) within an octave — far more subtle gradations than the Western twelve-tone scale. The drone of the tanpura provides a sonic axis mundi, around which melody spirals like a planet around its sun.

In China, the pentatonic scale (five tones) formed the backbone of traditional music. Philosophers like Confucius saw music as moral order made audible; correct tuning symbolized social harmony. The twelve lü pitch pipes of the Zhou dynasty were used not only for music but for aligning the empire’s calendar — literally tuning society to the cosmos.
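Chinese theorists derived their pitches with a procedure traditionally called sanfen sunyi (“subtracting or adding one third”): starting from a pipe or string length, alternately take two-thirds of it (a fifth higher) and four-thirds of it (a fourth lower), the same 3:2 relationship attributed to Pythagoras. This sketch follows one common version starting from 81 units, a value found in classical accounts; the helper names are illustrative, and frequency is treated as inversely proportional to length, which holds for ideal strings and approximately for pipes. Four steps produce the five tones of the pentatonic scale.

```python
from fractions import Fraction

# Tone names in the order the procedure generates them
GENERATED_ORDER = ["gong", "zhi", "shang", "yu", "jue"]


def sanfen_sunyi(start_length: int = 81, steps: int = 4) -> list[Fraction]:
    """Alternately multiply the length by 2/3 (subtract a third) and 4/3 (add a third)."""
    lengths = [Fraction(start_length)]
    for i in range(steps):
        factor = Fraction(2, 3) if i % 2 == 0 else Fraction(4, 3)
        lengths.append(lengths[-1] * factor)
    return lengths


lengths = sanfen_sunyi()
# A longer pipe sounds lower, so sort from longest to shortest to get rising pitch
scale = sorted(zip(lengths, GENERATED_ORDER), reverse=True)
for length, name in scale:
    ratio = Fraction(81) / length  # frequency relative to gong
    print(f"{name:>5}: length {length}, frequency ratio {ratio}")
```

The resulting ratios, 1, 9/8, 81/64, 3/2, and 27/16, are exactly the five pentatonic tones gong, shang, jue, zhi, and yu.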

Across the Islamic world, the maqam system offered yet another model — modal scales using microtones and ornamentation to evoke precise emotional states. Its influence spread westward through Moorish Spain, shaping medieval European music.

Despite these differences, a common truth emerges: every culture treats sound as more than sensation — as a structure of meaning.

⸻

The Symbolic and Philosophical Meaning of Sound

From the Vedas to the Pythagoreans, sound has always been the metaphor of creation. In Hindu cosmology, the universe begins with Om — the primordial vibration. In ancient Greece, it was the Music of the Spheres, celestial harmony heard only by the soul. In Christianity, “In the beginning was the Word.”

These are not coincidences. All imply that existence itself is vibration — that to be is to resonate.

In the mystic traditions of Sufism, rhythm and chant (zikr, qawwali, sama) induce ecstatic states, bridging human and divine consciousness. Neuroscientists today might describe the same as synchronization of cortical oscillations and limbic arousal. Two languages, one reality.

In Taoism, silence and sound are complements, yin and yang. The guqin player seeks not virtuosity but emptiness between notes — the breath of the Dao. In West African drumming, polyrhythm symbolizes community: distinct voices interlock to form a coherent whole.

Whether through sacred chant or symphonic orchestra, humans use sound to mirror the cosmos, to find pattern in chaos, to say: we are part of a larger rhythm.

⸻

Case Studies and Evidence

1. Parkinson’s Patients and Rhythm Therapy – In controlled studies, rhythmic auditory stimulation improved walking speed and stride length by up to 25%. Imaging data pointed to increased dopaminergic activity in the putamen, associated with improved timing control.

2. Music and Immunity – Subjects exposed to relaxing classical music for 30 minutes showed elevated immunoglobulin A levels, suggesting an enhanced immune response.

3. Premature Infants – Lullabies sung by parents reduced infants’ heart rates and improved oxygen saturation, suggesting that gentle rhythm can regulate immature physiological systems.

4. Chronic Pain – Listening to preferred music for 20 minutes daily reduced perceived pain scores by 21% in fibromyalgia patients, likely through endorphin and dopamine release.
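Rhythmic auditory stimulation, the technique in the first case study, is commonly described as setting a metronome to the patient’s own walking cadence and then gradually raising the tempo. The sketch below computes the click interval and click times for such a cue; the function names, the 100 steps-per-minute baseline, and the 10% speed-up are hypothetical illustrations, not a clinical protocol.

```python
def cue_interval_ms(cadence_steps_per_min: float, speedup: float = 0.0) -> float:
    """Milliseconds between metronome clicks for a target cadence (one click per step)."""
    target_cadence = cadence_steps_per_min * (1 + speedup)
    return 60_000 / target_cadence


def click_times_s(cadence_steps_per_min: float, n_clicks: int, speedup: float = 0.0) -> list[float]:
    """Onset times (seconds) of the first n_clicks clicks, starting at t = 0."""
    interval_s = cue_interval_ms(cadence_steps_per_min, speedup) / 1000
    return [i * interval_s for i in range(n_clicks)]


baseline = 100.0  # hypothetical measured cadence in steps per minute
print(cue_interval_ms(baseline))                   # 600.0
print(round(cue_interval_ms(baseline, 0.10), 1))   # 545.5
print(click_times_s(baseline, 4))
```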

Each of these studies reinforces what Anna’s recovery revealed: music is not mere entertainment for the brain; it is nourishment for it.

⸻

Application of Sound in Modern Technologies and Therapy

Today, the same principles that heal through melody are being engineered into devices and protocols.

- Neurologic Music Therapy (NMT) is now used clinically to restore speech after stroke, improve gait in Parkinson’s, and enhance attention in traumatic brain injury.

- Focused Ultrasound Neuromodulation uses inaudible high-frequency sound to stimulate or calm specific brain regions without surgery.

- Binaural Beat Therapy explores using slightly offset frequencies in each ear to entrain desired brainwave states — for relaxation, sleep, or focus.

- Personalized Music Medicine integrates AI and biometric sensors to curate playlists based on heart rate and stress levels, dynamically adjusting tempo and key.

- Even diagnostic imaging — ultrasound, echocardiography, sonography — relies on sound to visualize the body’s hidden architecture.
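A binaural-beat stimulus is simple to construct: play one pure tone in the left ear and a slightly different one in the right, and the listener perceives a beat at the difference frequency. This sketch writes such a stereo file with Python’s standard `wave` module; the 200 Hz and 210 Hz carriers (a 10 Hz beat), the volume, and the function names are illustrative assumptions, and whether such beats reliably shift brain states is still being studied.

```python
import io
import math
import struct
import wave


def write_binaural(target, f_left_hz: float, f_right_hz: float, duration_s: float,
                   sample_rate: int = 44_100, volume: float = 0.3) -> None:
    """Write a 16-bit stereo WAV with a different pure tone in each channel.

    target may be a file path or a binary file-like object.
    """
    n_frames = int(duration_s * sample_rate)
    frames = bytearray()
    for i in range(n_frames):
        t = i / sample_rate
        left = volume * math.sin(2 * math.pi * f_left_hz * t)
        right = volume * math.sin(2 * math.pi * f_right_hz * t)
        frames += struct.pack("<hh", int(left * 32767), int(right * 32767))  # interleaved L, R
    with wave.open(target, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)      # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))


def beat_frequency_hz(f_left_hz: float, f_right_hz: float) -> float:
    """Perceived binaural beat rate: the difference between the two carriers."""
    return abs(f_left_hz - f_right_hz)


buffer = io.BytesIO()  # write to memory; pass a path such as "beat.wav" to save a file instead
write_binaural(buffer, 200.0, 210.0, duration_s=1.0)
print(beat_frequency_hz(200.0, 210.0))  # 10.0
```

The effect depends on headphones: over loudspeakers the two tones mix in the air and produce ordinary acoustic beats instead.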

In every case, vibration remains the messenger, from ancient drums to focused ultrasound beams.
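Diagnostic ultrasound turns echo timing into distance. Scanners conventionally assume sound travels through soft tissue at about 1,540 m/s, and because each pulse goes to a reflecting boundary and back, depth is half the round-trip distance. A minimal sketch (the function name is illustrative):

```python
SPEED_SOFT_TISSUE_M_S = 1_540.0  # conventional average assumed by medical scanners


def echo_depth_mm(round_trip_us: float, speed_m_s: float = SPEED_SOFT_TISSUE_M_S) -> float:
    """Depth of a reflector from the pulse's round-trip time in microseconds."""
    one_way_m = speed_m_s * (round_trip_us * 1e-6) / 2  # halve: out and back
    return one_way_m * 1_000


for echo_us in (13, 65, 130):
    print(f"echo after {echo_us:>3} µs -> reflector at {echo_depth_mm(echo_us):.1f} mm")
```

This is the origin of the sonographer’s rule of thumb that each 13 µs of echo delay corresponds to roughly 1 cm of depth.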

⸻

Conclusion: The Universe as Resonance

Anna’s hands still tremble slightly when she plays, but her music is stronger than before. On her latest scan, the damaged hemisphere shows faint activity — faint, but synchronized with its twin. When asked how she feels, she answers softly, “I don’t play the piano anymore. The piano plays me.”

Her words summarize the central truth of this exploration: sound is not outside us — we are made of it. Our heartbeat, our breathing, our neural oscillations all pulse in rhythm with the world. From the hum of atoms to the chorus of galaxies, everything vibrates.

Music, then, is not an invention. It is a recognition. It is the universe becoming self-aware through resonance.

As scientists, we can trace every waveform and chart every frequency; as poets, we can only listen and say, this is how meaning sounds.

And perhaps, in that listening, we heal — as Anna did — one note, one neuron, one heartbeat at a time.

⸻

Discover more from RETHINK! SEEK THE BRIGHT SIDE
